How to Implement the Stanford Named Entity Recognition Model

- May 26, 2018

- Posted by: FOYI

- Categories: Blog, How to Guide

What is Named Entity Recognition (NER)?

Named Entity Recognition (NER) is the process of detecting the proper names mentioned in a text and classifying them into categories such as person, location and organization. It is usually the first step in Information Extraction (IE) tasks in Natural Language Processing.

Business Use Cases for NER

NER is needed across many domains. Below are a few sample business use cases.

1. Investment research:

To track company announcements, people’s reactions to them and their impact on stock prices, one first needs to identify the people and organization names in the text.

2. Chatbots in multiple domains:

To identify places and dates when booking hotel rooms, air tickets, etc.

3. Insurance domain:

Identify and mask people’s names in feedback forms before analysing them. This is needed for regulatory compliance (for example, HIPAA).
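For the insurance use case, the masking step can run directly on the tagger’s output, which is a list of (word, tag) pairs. Below is a minimal sketch under that assumption; the function name `mask_names`, the `[NAME]` placeholder and the sample tokens are hypothetical, chosen only for illustration.

```python
from itertools import groupby


def mask_names(tagged_tokens, tags_to_mask=("PERSON",), placeholder="[NAME]"):
    """Rebuild the text, replacing each run of tokens with a masked tag by one placeholder."""
    pieces = []
    # groupby splits the tokens into consecutive runs of masked / unmasked words.
    for is_masked, run in groupby(tagged_tokens, key=lambda pair: pair[1] in tags_to_mask):
        if is_masked:
            pieces.append(placeholder)  # one placeholder per contiguous name
        else:
            pieces.extend(word for word, _ in run)
    return " ".join(pieces)


# Hypothetical tagger output for one feedback-form sentence (illustrative only).
tagged = [("john", "PERSON"), ("smith", "PERSON"), ("called", "O"),
          ("about", "O"), ("his", "O"), ("claim.", "O")]
print(mask_names(tagged))
# [NAME] called about his claim.
```

Collapsing each run of PERSON tokens into a single placeholder avoids leaking the length of a name. Because the text is rebuilt from whitespace-split tokens, any punctuation attached to a masked word is dropped along with it; `tags_to_mask` can be widened, for example to `("PERSON", "LOCATION")`.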

Installation Prerequisites

1. Download Stanford NER from http://nlp.stanford.edu/software/stanford-ner-2015-04-20.zip

2. Unzip the archive and save the extracted folder to a drive.

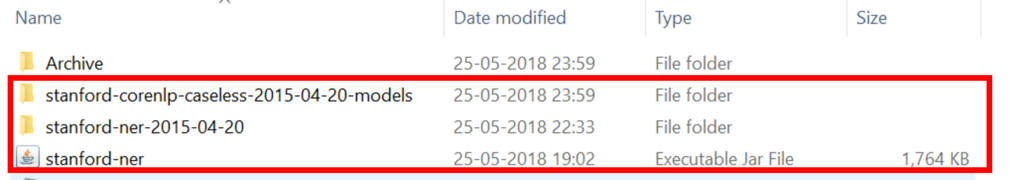

3. Copy stanford-ner.jar from the extracted folder and save it just outside that folder, as shown in the image below.

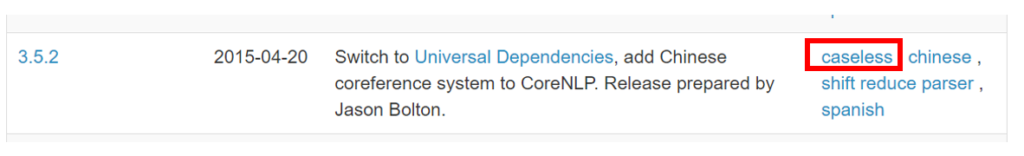

4. Download the caseless models from https://stanfordnlp.github.io/CoreNLP/history.html by clicking the “caseless” link for the release that matches your Stanford NER version. Caseless models can identify named entities even when the text does not follow standard capitalization, for example in all-lowercase input.

5. Save the extracted models folder in the same location as the Stanford NER folder for ease of access. Then run the following Python code.

```python
# Import the required libraries.
import os

from nltk.tag import StanfordNERTagger

# Set environment variables programmatically.
# Set CLASSPATH to the location of the Stanford NER jar file.
os.environ['CLASSPATH'] = '<path to the file>/stanford-ner-2015-04-20/stanford-ner.jar'

# Set STANFORD_MODELS to the directory where the NER models are stored.
os.environ['STANFORD_MODELS'] = '<path to the file>/stanford-corenlp-caseless-2015-04-20-models/edu/stanford/nlp/models/ner'

# Point NLTK at the Java executable (adjust to your own JDK installation).
java_path = 'C:/Program Files/Java/jdk1.8.0_161/bin/java.exe'
os.environ['JAVAHOME'] = java_path

# Set the path to the model you would like to use.
stanford_classifier = '<path to the file>/stanford-corenlp-caseless-2015-04-20-models/edu/stanford/nlp/models/ner/english.all.3class.caseless.distsim.crf.ser.gz'

# Build the NER tagger object.
st = StanfordNERTagger(stanford_classifier)

# A sample text for NER tagging.
text = 'srinivas ramanujan went to the united kingdom. There he studied at cambridge university.'

# Split on whitespace, tag the tokens and print the (word, tag) pairs.
tagged = st.tag(text.split())
print(tagged)
```

Output:

```
[(u'srinivas', u'PERSON'),
 (u'ramanujan', u'PERSON'),
 (u'went', u'O'),
 (u'to', u'O'),
 (u'the', u'O'),
 (u'united', u'LOCATION'),
 (u'kingdom.', u'LOCATION'),
 (u'There', u'O'),
 (u'he', u'O'),
 (u'studied', u'O'),
 (u'at', u'O'),
 (u'cambridge', u'ORGANIZATION'),
 (u'university', u'ORGANIZATION')]
```
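The tagger labels each token separately, so a multi-word name such as “cambridge university” comes back as several tuples. A minimal sketch that merges consecutive tokens sharing a tag into entity spans is shown below. It assumes IO-style tags (a plain PERSON / LOCATION / ORGANIZATION / O label per token, as in the output above); `group_entities` is a hypothetical helper, not part of NLTK.

```python
from itertools import groupby


def group_entities(tagged_tokens, outside_tag="O"):
    """Merge consecutive tokens that share a tag into (entity_text, tag) spans."""
    entities = []
    for tag, run in groupby(tagged_tokens, key=lambda pair: pair[1]):
        if tag == outside_tag:
            continue  # skip words that are not part of any entity
        # Join the run's words and drop trailing punctuation left over from str.split().
        text = " ".join(word for word, _ in run).rstrip(".,;:!?")
        entities.append((text, tag))
    return entities


# The tagger output shown above.
tagged = [
    ("srinivas", "PERSON"), ("ramanujan", "PERSON"), ("went", "O"),
    ("to", "O"), ("the", "O"), ("united", "LOCATION"),
    ("kingdom.", "LOCATION"), ("There", "O"), ("he", "O"),
    ("studied", "O"), ("at", "O"), ("cambridge", "ORGANIZATION"),
    ("university", "ORGANIZATION"),
]
print(group_entities(tagged))
# [('srinivas ramanujan', 'PERSON'), ('united kingdom', 'LOCATION'), ('cambridge university', 'ORGANIZATION')]
```

With IO tags, two different entities of the same type that sit directly next to each other cannot be told apart, so this sketch would merge them into one span.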

Additional Reading

Stanford NER uses a general implementation of linear-chain Conditional Random Field (CRF) sequence models. CRFs look similar to Hidden Markov Models (HMMs) on the surface, but they differ in important ways. Below are some key points about CRFs in general.

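• A CRF is a discriminative model: it directly models the conditional probability of the label sequence given the words, P(y | x), whereas an HMM is a generative model of the joint probability P(x, y).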

• Unlike an HMM, a CRF does not assume that each word depends only on its own label, so it can use overlapping features of the input. For example, the current word, the previous word and the next word can all be used as features at the same position.

• Relative to HMMs or Maximum Entropy Markov Models (MEMMs), CRFs are generally the slowest to train.
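The points above can be made concrete with a toy linear-chain CRF in plain Python. This is a sketch under stated assumptions, not Stanford NER’s implementation: the three labels, the feature names and the hand-set weights are hypothetical (a real CRF learns its weights from labelled data and uses a much richer feature set). Each position uses overlapping features of the current, previous and next word; the model scores a whole label sequence and normalizes it as P(y | x) = exp(score(x, y)) / Z(x), where Z(x) sums over every possible label sequence and is computed with the forward algorithm. The best sequence is found with Viterbi decoding.

```python
import itertools
import math

LABELS = ["O", "PERSON", "LOCATION"]

# Hypothetical hand-set weights for (feature, label) pairs; a real CRF learns these.
EMISSION_WEIGHTS = {
    ("bias", "PERSON"): -2.0, ("bias", "LOCATION"): -2.0,  # entities are rarer than O
    ("word=srinivas", "PERSON"): 2.0, ("next=ramanujan", "PERSON"): 1.5,
    ("word=ramanujan", "PERSON"): 3.0, ("prev=srinivas", "PERSON"): 1.5,
    ("word=went", "O"): 2.0,
    ("word=united", "LOCATION"): 1.0, ("next=kingdom", "LOCATION"): 2.0,
    ("word=kingdom", "LOCATION"): 2.5, ("prev=united", "LOCATION"): 1.0,
}
# Hypothetical weights for (previous label, current label); missing pairs score 0.
TRANSITION_WEIGHTS = {
    ("PERSON", "PERSON"): 1.0, ("LOCATION", "LOCATION"): 1.0, ("PERSON", "LOCATION"): -2.0,
}


def features(words, t):
    """Overlapping features at position t: a bias plus the current, previous and next word."""
    prev_word = words[t - 1] if t > 0 else "<s>"
    next_word = words[t + 1] if t + 1 < len(words) else "</s>"
    return ["bias", f"word={words[t]}", f"prev={prev_word}", f"next={next_word}"]


def emission(words, t, label):
    return sum(EMISSION_WEIGHTS.get((f, label), 0.0) for f in features(words, t))


def transition(prev_label, label):
    return TRANSITION_WEIGHTS.get((prev_label, label), 0.0)


def score(words, labels):
    """Unnormalized log-score of one complete label sequence."""
    total = sum(emission(words, t, y) for t, y in enumerate(labels))
    return total + sum(transition(a, b) for a, b in zip(labels, labels[1:]))


def log_sum_exp(values):
    m = max(values)  # subtract the max for numerical stability
    return m + math.log(sum(math.exp(v - m) for v in values))


def log_partition(words):
    """Forward algorithm: log Z(x), summing exp(score) over all label sequences."""
    alpha = {y: emission(words, 0, y) for y in LABELS}
    for t in range(1, len(words)):
        alpha = {y: emission(words, t, y)
                 + log_sum_exp([alpha[p] + transition(p, y) for p in LABELS])
                 for y in LABELS}
    return log_sum_exp(list(alpha.values()))


def probability(words, labels):
    """Conditional probability P(labels | words) = exp(score) / Z(words)."""
    return math.exp(score(words, labels) - log_partition(words))


def viterbi(words):
    """Highest-scoring label sequence, i.e. the one with the highest P(y | x)."""
    best = {y: (emission(words, 0, y), [y]) for y in LABELS}
    for t in range(1, len(words)):
        best = {y: max(((best[p][0] + transition(p, y) + emission(words, t, y), best[p][1] + [y])
                        for p in LABELS), key=lambda cand: cand[0])
                for y in LABELS}
    return max(best.values(), key=lambda cand: cand[0])[1]


words = "srinivas ramanujan went to the united kingdom".split()
print(viterbi(words))
# ['PERSON', 'PERSON', 'O', 'O', 'O', 'LOCATION', 'LOCATION']

# Sanity check: the probabilities of all 3**7 possible label sequences sum to 1.
total = sum(probability(words, list(seq)) for seq in itertools.product(LABELS, repeat=len(words)))
print(round(total, 6))
# 1.0
```

The normalizer Z(x) runs over every label sequence of the sentence, so training must recompute it (via the forward algorithm) for each training sentence at every optimization step. This global normalization is one reason CRF training is comparatively slow.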

Note: This article explains how to use the Stanford NER algorithm for research purposes and does not promote it for commercial use. For questions on the commercial aspects of using this algorithm, please contact its authors.

References

• Jenny Rose Finkel, Trond Grenager, and Christopher Manning. 2005. Incorporating Non-local Information into Information Extraction Systems by Gibbs Sampling. Proceedings of the 43rd Annual Meeting of the Association for Computational Linguistics (ACL 2005), pp. 363-370.

• An introduction to conditional random fields

• Introduction to Stanford NER package
